Building a Versatile AI Agent with LangGraph, Vector Search, and Multiple LLMs

GeraDeluxer

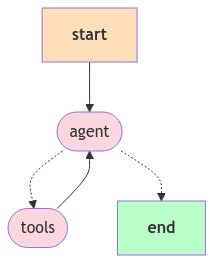

The Workflow: Using LangGraph for Coordination

The process is built around LangGraph, a framework for building workflows that can branch, loop, and make decisions based on intermediate results. This lets the agent handle multi-step tasks rather than single request-response commands. The source code is available on GitHub.

Here's how the workflow is structured:

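```typescript
import { StateGraph, START, END } from "@langchain/langgraph";

// StateAnnotation, toolNode, the node functions, and the routing functions are defined elsewhere in the source.
const workflow = new StateGraph(StateAnnotation)
  .addNode("agent", callModel)
  .addNode("retrieve", toolNode)
  .addNode("gradeDocuments", gradeDocuments)
  .addNode("rewrite", rewrite)
  .addNode("generate", generate)
  .addEdge(START, "agent")
  // Go to "retrieve" if the model requested a tool call; otherwise finish.
  .addConditionalEdges("agent", shouldRetrieve)
  .addEdge("retrieve", "gradeDocuments")
  // checkRelevance returns "yes" or "no"; the map names the next node for each answer.
  .addConditionalEdges("gradeDocuments", checkRelevance, { yes: "generate", no: "rewrite" })
  .addEdge("generate", END)
  // A rewritten question goes back to the agent for another attempt.
  .addEdge("rewrite", "agent");
```

StateGraph: Defines the flow using a custom state annotation, StateAnnotation.

Nodes: The key tasks in the workflow:

agent: Decides what to do next using the language model.

retrieve: Runs a vector search to find relevant data.

gradeDocuments: Assesses how relevant the retrieved documents are.

rewrite: Rewrites the user's question to improve retrieval.

generate: Produces the final answer from the retrieved data.

Edges: Connect nodes and define the order in which they run.

Conditional edges: Call a routing function (shouldRetrieve, checkRelevance) and follow the edge that matches its return value.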
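To make the control flow concrete, here is a plain-TypeScript model of the same routing with no LangGraph dependency. It is a sketch, not how LangGraph executes graphs: the node bodies are hypothetical stubs (the grader marks documents relevant only after one rewrite) so the agent, retrieve, gradeDocuments, rewrite, agent loop is visible. The `maxSteps` cap is an assumption that stands in for the configurable recursion limit LangGraph applies, which is what stops an endless rewrite loop.

```typescript
// Plain-TypeScript model of the workflow's routing (hypothetical; not LangGraph's API).
type NodeName = "agent" | "retrieve" | "gradeDocuments" | "rewrite" | "generate";
const END = "__end__"; // stand-in for LangGraph's END marker
type Target = NodeName | typeof END;

interface SketchState {
  wantsTool: boolean; // stands in for "the last AI message has tool_calls"
  relevant: boolean;  // stands in for the grader's yes/no verdict
  rewrites: number;   // how many times the question has been rewritten
  path: string[];     // visited nodes, recorded for inspection
}

// Node bodies are stubs: the grader says "relevant" only after one rewrite.
const nodes: Record<NodeName, (s: SketchState) => void> = {
  agent: (s) => { s.path.push("agent"); },
  retrieve: (s) => { s.path.push("retrieve"); },
  gradeDocuments: (s) => { s.path.push("gradeDocuments"); s.relevant = s.rewrites >= 1; },
  rewrite: (s) => { s.path.push("rewrite"); s.rewrites += 1; },
  generate: (s) => { s.path.push("generate"); },
};

// Each entry returns the next node, mirroring the addEdge / addConditionalEdges calls.
const next: Record<NodeName, (s: SketchState) => Target> = {
  agent: (s) => (s.wantsTool ? "retrieve" : END),               // shouldRetrieve
  retrieve: () => "gradeDocuments",
  gradeDocuments: (s) => (s.relevant ? "generate" : "rewrite"), // checkRelevance: yes / no
  rewrite: () => "agent",
  generate: () => END,
};

function run(state: SketchState, maxSteps = 25): SketchState {
  let current: Target = "agent"; // START -> agent
  let steps = 0;
  while (current !== END) {
    if (steps++ >= maxSteps) throw new Error("step limit reached");
    nodes[current](state);
    current = next[current](state);
  }
  return state;
}

const traced = run({ wantsTool: true, relevant: false, rewrites: 0, path: [] });
console.log(traced.path.join(" -> "));
// agent -> retrieve -> gradeDocuments -> rewrite -> agent -> retrieve -> gradeDocuments -> generate
```

If the model answers without requesting a tool (`wantsTool: false`), the run visits only the agent node and ends.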

Tools: Adding Functionality

LangChain lets you define tools: functions the model can choose to call for specific tasks. Here's one example, a document-search tool:

```typescript
import { tool } from "@langchain/core/tools";
import { z } from "zod";

const searchDocumentTool = tool(async ({ query }) => { /* ... */ }, {
  name: "search",
  description: "Search and return specific details about Gerardo Del Angel, like work history, certificates, or education",
  schema: z.object({
    query: z.string().describe("The query to use in your search."),
  }),
});
```

tool(): Wraps an async function as a tool object the model can call.

name and description: Tell the agent what the tool does and when to use it.

schema: Defines the expected input with zod; here, a single query string.

Implementation: Searches the documents using a vector store.
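When the model decides to use a tool, its reply carries tool calls that name the tool and supply arguments, and the retrieve node runs the matching tool. The sketch below imitates that dispatch in plain TypeScript. The registry, the synchronous `runToolCalls` function, and the placeholder search result are hypothetical, and the `typeof` check is a hand-written stand-in for the zod schema's validation; this is not LangChain's ToolNode implementation.

```typescript
// Hypothetical sketch of tool-call dispatch (not LangChain's ToolNode implementation).
interface ToolCall {
  name: string;                  // which tool the model wants
  args: Record<string, unknown>; // arguments the model generated
  id: string;                    // lets the result be matched to the call
}

interface ToolResult {
  toolCallId: string;
  content: string;
}

type ToolFn = (args: Record<string, unknown>) => string;

// Stand-in for the search tool: the typeof check plays the role of the zod schema,
// and the returned string is a placeholder for real vector-store results.
const search: ToolFn = (args) => {
  if (typeof args.query !== "string") throw new Error("query must be a string");
  return `results for: ${args.query}`;
};

// Tools are looked up by the `name` the model sees in the tool definition.
const registry = new Map<string, ToolFn>([["search", search]]);

function runToolCalls(calls: ToolCall[]): ToolResult[] {
  return calls.map((call) => {
    const fn = registry.get(call.name);
    const content = fn ? fn(call.args) : `Unknown tool: ${call.name}`;
    return { toolCallId: call.id, content };
  });
}

console.log(runToolCalls([{ name: "search", args: { query: "education" }, id: "call_1" }]));
```

This is why the `name` and `description` fields matter: the model only sees those and the schema when deciding which tool to call and with what arguments.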

Vector Search: Improving Information Retrieval

The system uses vector search to locate relevant documents:

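```typescript
import { MemoryVectorStore } from "langchain/vectorstores/memory";
import { OllamaEmbeddings } from "@langchain/ollama";

// docSplits: the source documents after chunking (prepared elsewhere in the source).
const vectorStore = await MemoryVectorStore.fromDocuments(
  docSplits,
  new OllamaEmbeddings({ model: "bge-large" }),
);

const retriever = vectorStore.asRetriever({
  searchType: "mmr", // maximal marginal relevance
  k: 35,             // number of documents returned
  searchKwargs: {
    fetchK: 40,      // candidates fetched by similarity before MMR re-ranking
    lambda: 0.5,     // 1 = pure relevance, 0 = maximum diversity
  },
});

const retrievedDocuments = await retriever.invoke(query);
```

MemoryVectorStore: Stores document embeddings in memory.

OllamaEmbeddings: Generates text embeddings with an Ollama-served model (bge-large here).

retriever: Retrieves documents with MMR (maximal marginal relevance), which balances relevance to the query against diversity among the results.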
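The mmr search type re-ranks a pool of fetchK candidates and keeps k of them. The textbook maximal marginal relevance rule picks, at each step, the candidate d that maximizes lambda * sim(q, d) - (1 - lambda) * max over already-selected s of sim(d, s). The sketch below implements that rule with cosine similarity on plain arrays and toy 2-D vectors; it illustrates the technique, and LangChain's internal implementation may differ in details.

```typescript
// Cosine similarity between two equal-length vectors (0 if either is all zeros).
function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Textbook maximal marginal relevance: indices of up to k candidates, in pick order.
function mmr(query: number[], candidates: number[][], k: number, lambda: number): number[] {
  const selected: number[] = [];
  const remaining = candidates.map((_, i) => i);

  while (selected.length < k && remaining.length > 0) {
    let bestPos = 0;
    let bestScore = -Infinity;
    for (let pos = 0; pos < remaining.length; pos++) {
      const idx = remaining[pos];
      const relevance = cosine(query, candidates[idx]);
      // Redundancy: similarity to the closest already-selected candidate (0 before the first pick).
      let redundancy = selected.length > 0 ? -Infinity : 0;
      for (const s of selected) redundancy = Math.max(redundancy, cosine(candidates[idx], candidates[s]));
      const score = lambda * relevance - (1 - lambda) * redundancy;
      if (score > bestScore) {
        bestScore = score;
        bestPos = pos;
      }
    }
    selected.push(remaining[bestPos]);
    remaining.splice(bestPos, 1);
  }
  return selected;
}

// Toy "embeddings": candidate 1 is a near-duplicate of candidate 0.
const query = [1, 1];
const candidates = [[1, 0.8], [1, 0.7], [0.6, 1]];
console.log(mmr(query, candidates, 2, 0.5)); // [ 0, 2 ]
console.log(mmr(query, candidates, 2, 1));   // [ 0, 1 ]
```

With lambda = 0.5, the near-duplicate [1, 0.7] loses the second slot to the more distinct [0.6, 1]; with lambda = 1, the ranking is by relevance alone.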

LangGraph Node Functions: Steps in the Workflow

Key functions driving the process:

shouldRetrieve Function

Determines whether to retrieve information:

```typescript
import { END } from "@langchain/langgraph";

// Routes to "retrieve" when the model's last message requested a tool call; otherwise ends the run.
function shouldRetrieve(state: typeof StateAnnotation.State): string {
  const { messages } = state;
  const lastMessage = messages[messages.length - 1];

  if ("tool_calls" in lastMessage && Array.isArray(lastMessage.tool_calls) && lastMessage.tool_calls.length) {
    return "retrieve";
  }
  return END;
}
```

gradeDocuments Function

Grades the relevance of retrieved documents:

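```typescript
import { ChatPromptTemplate } from "@langchain/core/prompts";
import { ChatOllama } from "@langchain/ollama";

async function gradeDocuments(state: typeof StateAnnotation.State) {
  const { messages } = state;
  // The user's question is the first message; the retrieved documents are in the last one.
  const question = messages[0].content as string;
  const lastMessage = messages[messages.length - 1];

  const prompt = ChatPromptTemplate.fromTemplate(`...`); // grading prompt, elided in the original

  // `tool` must be the relevance-grading tool definition (not shown in this excerpt).
  const model = new ChatOllama({
    baseUrl: "http://127.0.0.1:11434",
    model: "qwen2.5-coder:14b",
  }).bindTools([tool]);

  // Pipe the prompt into the model so {question} and {context} are filled in before the call.
  const chain = prompt.pipe(model);
  const response = await chain.invoke({
    question,
    context: lastMessage.content as string,
  });

  return { messages: [response] };
}
```

Other Functions:

callModel: Filters the conversation and sends the user input to the model.

checkRelevance: Reads the grader's verdict and returns "yes" or "no", which routes to generate or rewrite.

rewrite: Rewrites the user's question for better retrieval results.

generate: Produces the final answer from the relevant documents.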
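A routing function like checkRelevance only has to turn the grader's output into one of the keys of the edge map { yes: "generate", no: "rewrite" }. The sketch below assumes the grading model replies with a tool call whose arguments include a `binaryScore` string; that field name, the tool name "grade", and the simplified message types are hypothetical, so adapt them to the actual grading tool's schema. Treating any unexpected answer as "no" sends the run to rewrite instead of generating from documents that were never confirmed relevant.

```typescript
// Simplified message shapes: only the fields this sketch reads (hypothetical).
interface ToolCallLike {
  name: string;
  args: Record<string, unknown>;
}
interface MessageLike {
  content: string;
  tool_calls?: ToolCallLike[];
}

// Edge map from the workflow definition: grader verdict -> next node.
const relevanceRoutes = { yes: "generate", no: "rewrite" } as const;

// Reads the grader's verdict from the last message's first tool call.
// `binaryScore` is an assumed argument name; match it to the grading tool's schema.
function checkRelevance(messages: MessageLike[]): keyof typeof relevanceRoutes {
  const last = messages[messages.length - 1];
  const raw = last?.tool_calls?.[0]?.args["binaryScore"];
  const score = typeof raw === "string" ? raw.trim().toLowerCase() : "";
  return score === "yes" ? "yes" : "no"; // anything unexpected counts as "not relevant"
}

const graded: MessageLike[] = [
  { content: "Where did Gerardo study?" },
  { content: "", tool_calls: [{ name: "grade", args: { binaryScore: "Yes" } }] },
];
console.log(relevanceRoutes[checkRelevance(graded)]); // generate
```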

Flexibility with LLMs

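Switching models is straightforward: the agent talks to the model through LangChain's chat-model interface, so changing providers mostly means changing the constructor. For example, to use OpenAI:

```typescript
import { ChatOpenAI } from "@langchain/openai";

// Reads the API key from the OPENAI_API_KEY environment variable.
// `tools` is the agent's tool list (for example, [searchDocumentTool]).
const model = new ChatOpenAI({ model: "gpt-4o-mini" }).bindTools(tools);
```

Each provider ships in its own package (here, @langchain/openai), so install the matching package before switching.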

Implementation Overview

The LangGraph workflow is compiled and executed within an API handler:

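```typescript
import { HumanMessage } from "@langchain/core/messages";

// Inside the API route handler:
if (req.method === "POST") {
  const { prompt } = req.body;

  // Compile the graph definition into a runnable app.
  const app = workflow.compile();

  // Run the graph from START until it reaches END, seeding the state with the user's prompt.
  const finalState = await app.invoke({
    messages: [new HumanMessage(prompt)],
  });

  // The last message in the final state holds the answer.
  res.status(200).json({ message: finalState.messages[finalState.messages.length - 1].content });
}
```

StateGraph Compilation: workflow.compile() turns the graph definition into a runnable app.

Input and Output: The user's prompt goes in as a HumanMessage, and the content of the final message is returned as JSON.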
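The handler above compiles the graph on every request. Since the compiled app is not tied to a single request, one option is to compile once at startup and reuse it, and to reject bad input before invoking the model. The sketch below shows that shape with hypothetical minimal request/response types and an injected app, so it runs without a web framework or LangGraph; the 405 and 400 responses are an added assumption, not part of the original handler.

```typescript
// Hypothetical minimal shapes so the sketch runs without a web framework or LangGraph.
interface RequestLike {
  method?: string;
  body: { prompt?: unknown };
}
interface ResponseLike {
  status: number;
  body: unknown;
}
interface ChatMessage {
  content: string;
}
// Anything with the compiled graph's invoke() shape: messages in, final state out.
interface AppLike {
  invoke(input: { messages: ChatMessage[] }): Promise<{ messages: ChatMessage[] }>;
}

// Build the handler around an app compiled once at startup (e.g. workflow.compile()).
function makeHandler(app: AppLike) {
  return async (req: RequestLike): Promise<ResponseLike> => {
    if (req.method !== "POST") {
      return { status: 405, body: { error: "Method not allowed" } };
    }
    const prompt = req.body.prompt;
    if (typeof prompt !== "string" || prompt.trim() === "") {
      return { status: 400, body: { error: "prompt must be a non-empty string" } };
    }
    const finalState = await app.invoke({ messages: [{ content: prompt }] });
    const last = finalState.messages[finalState.messages.length - 1];
    return { status: 200, body: { message: last?.content ?? "" } };
  };
}

// Fake app for illustration: appends a canned answer to the conversation.
const fakeApp: AppLike = {
  invoke: async ({ messages }) => ({
    messages: [...messages, { content: `answer to: ${messages[0].content}` }],
  }),
};
const handler = makeHandler(fakeApp);
```

In the real route, the app passed to makeHandler would be the compiled workflow, and a thin adapter would copy status and body onto the framework's response object, for example with res.status(...).json(...).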

Key Points and Next Steps

Workflow Orchestration: LangGraph coordinates multi-step flows, including conditional branches and retry loops.

Information Retrieval: Vector search boosts the system’s ability to find relevant data.

LLM Integration: Supports various models for greater adaptability.

Modularity: Encourages reusable components for specific tasks.

Possible future enhancements include:

Dynamic tool definitions.

Better session management using databases.

Experimenting with diverse LLMs.

Connecting to external data sources.

Refining logic with more conditions and loops.