Run DeepSeek Locally with Ollama

GeraDeluxer

DeepSeek is a Chinese AI company working on advanced technologies like machine learning, natural language processing, and computer vision. Its goal is to develop artificial general intelligence (AGI): AI that goes beyond single-purpose applications and handles a wide range of challenges. Unlike standard AI, which is built for specific tasks, AGI would be able to apply knowledge in different situations and solve problems in multiple areas.

DeepSeek is committed to innovation, ethical AI, and collaboration to ensure its technology has a positive impact. By advancing AGI, the company hopes to improve industries, tackle global issues, and change how people interact with AI.

This guide walks through, step by step, how to run DeepSeek with Ollama on your local machine.

1. Install Ollama

macOS

Download Ollama for macOS from the Ollama download page (https://ollama.com/download).

Windows

Download Ollama for Windows from the Ollama download page (https://ollama.com/download).

Linux

Run the following command in your terminal:

    curl -fsSL https://ollama.com/install.sh | sh

2. Run the DeepSeek Model

Once Ollama is installed, you can run the DeepSeek model with:

    ollama run deepseek-r1

You can also explore other model options in the Ollama model library.
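If you would rather download the model before opening a chat session, the step above can be sketched as a small shell helper. This is only a sketch: `have_ollama` and `pull_deepseek` are hypothetical names made up for illustration, not part of Ollama. The only Ollama feature assumed is the CLI's `ollama pull` subcommand, and the default tag is the `deepseek-r1` one used in this guide.

```shell
# Hypothetical helper (not part of Ollama): succeed only if an
# `ollama` command is reachable from this shell.
have_ollama() {
    command -v ollama >/dev/null 2>&1
}

# Hypothetical helper: download a model without starting a chat session.
# Defaults to the deepseek-r1 tag used in this guide; pass another tag
# as the first argument to override it.
pull_deepseek() {
    model="${1:-deepseek-r1}"
    if ! have_ollama; then
        echo "ollama not found on PATH; install it first" >&2
        return 1
    fi
    ollama pull "$model"
}
```

After sourcing these functions, `pull_deepseek` fetches the default model and `pull_deepseek deepseek-r1:7b` fetches a specific variant. Running `ollama list` afterwards should show what is stored locally.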

Available DeepSeek Variants

DeepSeek-R1-Distill-Qwen-1.5B

    ollama run deepseek-r1:1.5b

DeepSeek-R1-Distill-Qwen-7B

    ollama run deepseek-r1:7b

DeepSeek-R1-Distill-Llama-8B

    ollama run deepseek-r1:8b
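When the variant is chosen inside a script, it can help to validate the size label before handing it to Ollama. The sketch below covers only the three variants listed above. `deepseek_tag` is a hypothetical helper name, not an Ollama command.

```shell
# Hypothetical helper: turn a size label into one of the model tags
# listed in this guide, rejecting anything else.
deepseek_tag() {
    case "$1" in
        1.5b|7b|8b)
            printf 'deepseek-r1:%s\n' "$1"
            ;;
        *)
            echo "unknown size: $1 (expected 1.5b, 7b, or 8b)" >&2
            return 1
            ;;
    esac
}
```

A typical call would be `ollama run "$(deepseek_tag 7b)"`. Smaller variants generally need less memory, so the 1.5b tag is a reasonable first try on modest hardware.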

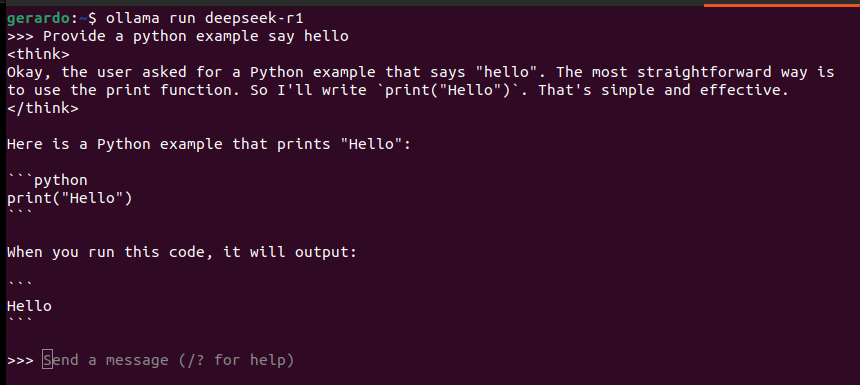

3. Test the Model

Once the model is running, you can test it by entering prompts and evaluating its responses.
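For a quick, repeatable check, you can also send a single prompt from the shell instead of typing it into the interactive session. The wrapper below is a minimal sketch: `ask_deepseek` is a hypothetical name, and it relies on `ollama run` accepting the prompt as an extra argument, in which case the CLI answers once and exits.

```shell
# Hypothetical wrapper: send one prompt to a local model and print the reply.
# Usage: ask_deepseek PROMPT [MODEL]   (MODEL defaults to deepseek-r1)
ask_deepseek() {
    prompt="$1"
    model="${2:-deepseek-r1}"
    if [ -z "$prompt" ]; then
        echo "usage: ask_deepseek PROMPT [MODEL]" >&2
        return 2
    fi
    # With a prompt argument, `ollama run` replies once and exits
    # rather than opening the interactive session.
    ollama run "$model" "$prompt"
}
```

For instance, `ask_deepseek "Explain recursion in one sentence" deepseek-r1:1.5b` sends the prompt to the smallest variant. Keeping the model as an optional second argument makes it easy to compare how different variants answer the same prompt.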

Example Output:

Now you’re ready to run DeepSeek locally with Ollama! 🎉